My development team began adopting Extreme Programming (XP) in January 2004.

Before this, we were hit and miss. Success relied on individuals. We had few shared practices. Our goal in going “Agile” was to consistently perform across projects.

“Agile” declares a set of common values and supportive practices. It fosters collaboration with customers and shared ownership within project teams.

In time, the team became proficient in core XP practices:

Planning

- User stories

- Iterative development

- Tracked velocity

- Daily stand-up meetings

- Regular retrospectives with continuous improvement

Designing

- Simple system metaphor

- Use of development spikes

- Refactoring

Coding

- Onsite customer

- Pair programming with switching

- Test driven development (TDD)

- Continuous integration

- Collective ownership

- Sustainable pace

Testing

- Extensive unit test coverage

- Bugs are resolved within the iteration

- Acceptance testing by the on-site customer

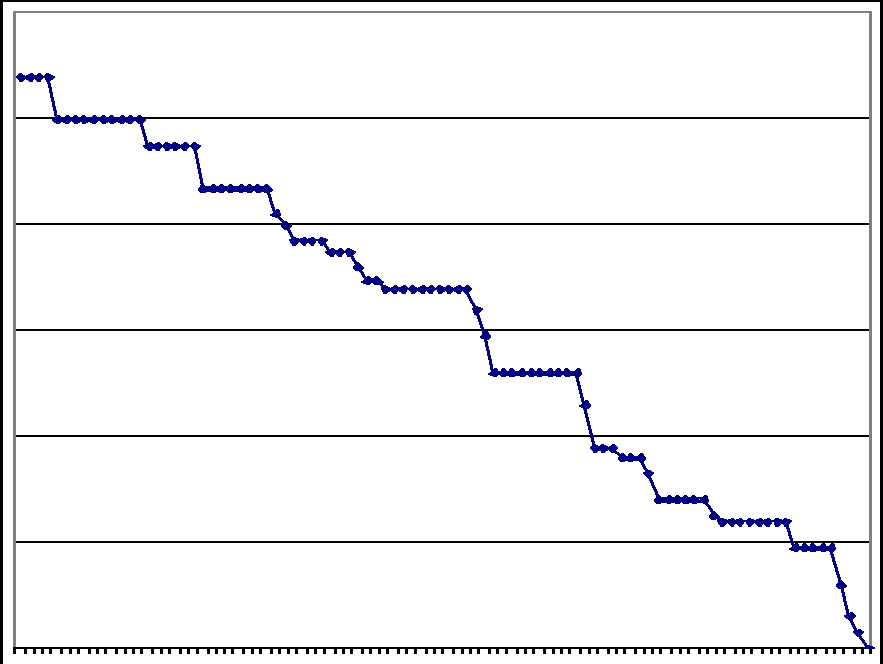

Within a year, the team’s performance was more consistent and visible. We were measuring our velocity and predictably delivering on our 30-day iteration goals.

We discovered our project management practices had become a bottleneck. We were clearly hitting idle periods within and around projects because of a failure to efficiently describe and prioritize work.

We introduced Scrum as a management framework on top of XP. It provided practices for organizing and prioritizing work. It helped us define roles and responsibilities.

We clarified our expectations of internal clients and achieved more efficient interactions overall. We created a mechanism for reporting progress and costs to senior management.

In Q1 2006, the team’s practices were evaluated by an Agile coach, Jason Lewis. Among his findings:

Oxygen Media’s Agile software development process overall rates above average and is better than the benchmark team. The benchmark did have considerably more Agile experience, but less time together as a team.

In the evaluation of practices, the team was overall: 1) well above average to outstanding in the adaptive learning practices, 2) above average in Sprint practices and 3) average in planning practices. High points for the team’s individual practices were the retrospective and use of the wall for iteration tracking. The one low point was the maturity of acceptance testing. When comparing roles to the benchmark team, the benchmark team had a much better customer role but the team was stronger in the developer and facilitator roles. When comparing the team’s adoption of the practices versus the benchmark, the team was generally more effective. Iteration tracking was one key area where the benchmark team was better; however, the team was much stronger in all the adaptive learning practices.

After the audit, we pre-staged our iteration planning, reduced our iterations to 2 weeks and formally planned releases.

We added discipline to our acceptance testing. We described acceptance tests in a narrative script authored and exercised by our product owners (or their proxies).

We never automated acceptance tests for rich Windows applications or systems tied to large, volatile back-end data stores. But by Q3 2007, the team was using automated acceptance tests on its web applications.

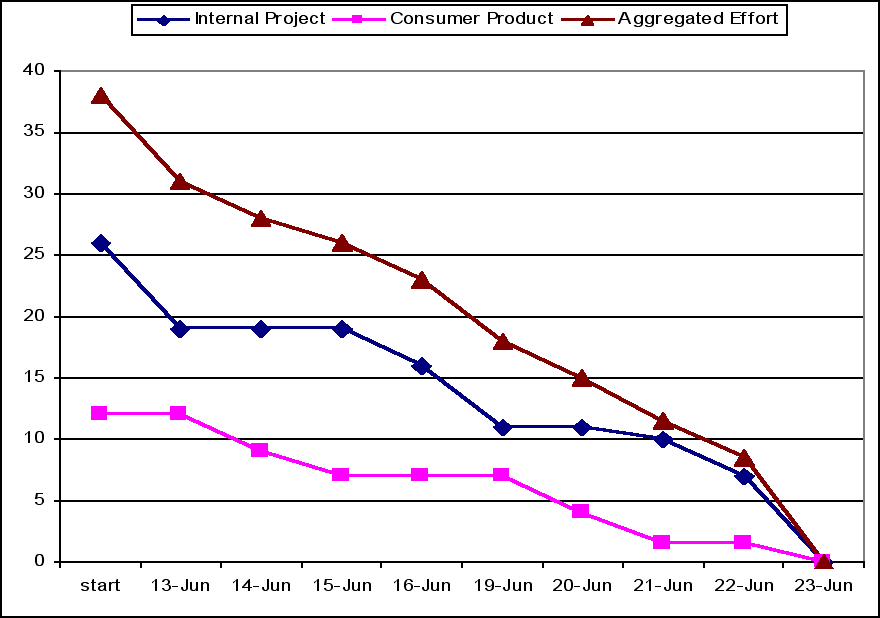

The most drastic improvement, however, was in the customer role. Scrum defines the responsibilities of the product owner. In our case, that role was divided between two individuals.

The product owner is an empowered single authority for prioritizing business value at the feature level. They are usually executive level and work in the business unit “funding” the work. They also have working knowledge of the system to be built. Product owners participate in planning and review, and are available for ad hoc questions within iterations.

The product owner proxy is a member of the development staff who acts as onsite proxy for the product owner. This person assists in authoring user stories and maintaining a product backlog, meets regularly with the product owner, and acts in their place to broker decisions within the development team during iterations.

By Q2 2007, the team had product owner proxies for both our IT and our consumer-facing work. Product owners included the VP of Broadcast Operations, VP of Ad Sales Traffic, our CTO, and our CEO.

Throughout 2006-2007 our team performed exceptionally well, balancing two simultaneous lines of work and maintenance in both .NET and Ruby on Rails with four to six developers. Our projects delivered on client satisfaction, originality and early monetary goals.

Team members raised their skills and began contributing to our field. They were writing, presenting and speaking at conferences on Scrum, XP and platform topics, as well as contributing to open source projects and developer knowledge bases. We were drawing positive attention from our peer community and within our company.

Our consumer product, Ript™, was recognized for its elegance in design and implementation by members of Microsoft’s platform and developer evangelist team as well as by members of the WPF team. It also achieved high ratings in usability testing with end users (average rating of 8 out of 10) and showed potential to deliver on its revenue targets.

At the end of 2007, our company was acquired by a much larger television company. The acquirer considers the software we wrote for internal use valuable enough that they hope to transition it into their much larger operations.